Despite year-old promises to fix its “Up Next” content recommendation system, YouTube is still suggesting conspiracy videos, hyperpartisan and misogynist videos, pirated videos, and content from hate groups following common news-related searches.

Erik Blad for BuzzFeed News

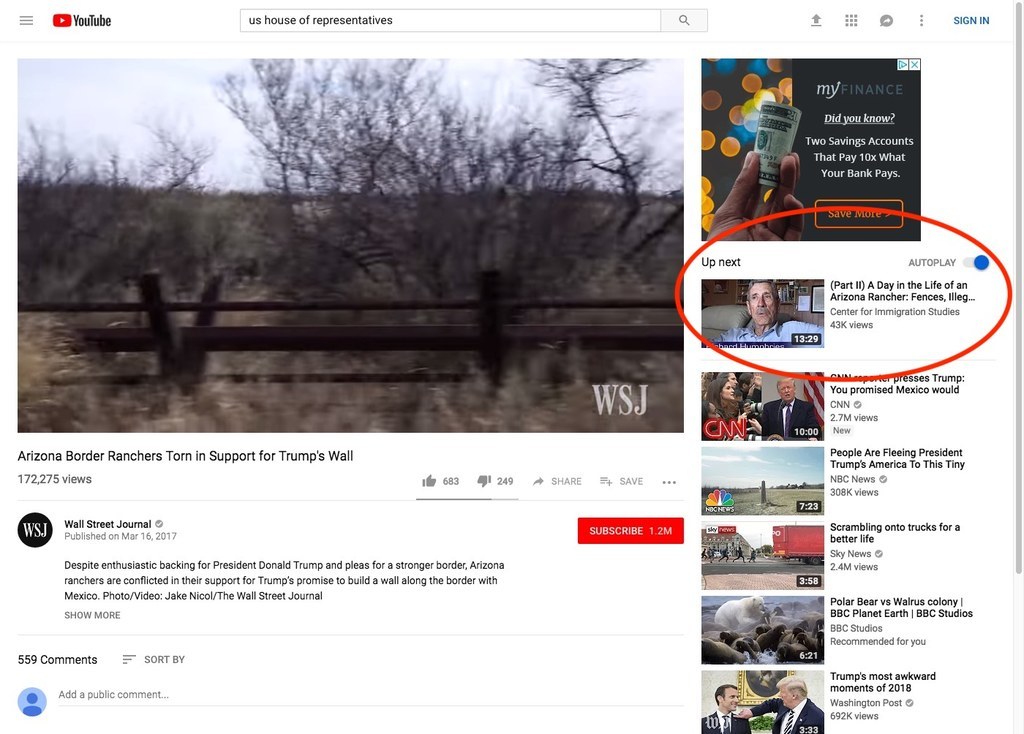

How many clicks through YouTube’s “Up Next” recommendations does it take to go from an anodyne PBS clip about the 116th United States Congress to an anti-immigrant video from a designated hate organization? Thanks to the site’s recommendation algorithm, just nine.

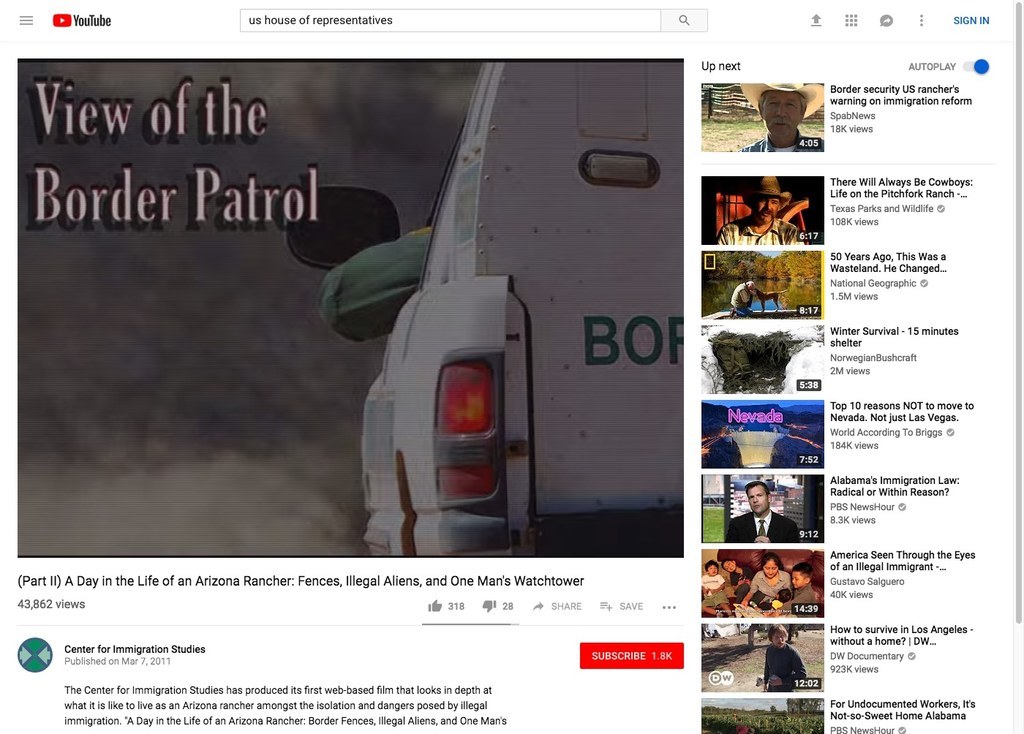

The video in question is “A Day in the Life of an Arizona Rancher.” It features a man named Richard Humphries recalling an incident in which a crying woman begged him not to report her to Border Patrol, though, unbeknownst to her, he had already done so. It’s been viewed over 47,000 times. Its top comment: “Illegals are our enemies , FLUSH them out or we are doomed.”

The Center for Immigration Studies, a think tank the Southern Poverty Law Center classified as an anti-immigrant hate group in 2016, posted the video to YouTube in 2011. But that designation didn’t stop YouTube’s Up Next from recommending it earlier this month after a search for “us house of representatives” conducted in a fresh search session with no viewing history, personal data, or browser cookies. YouTube’s top result for this query was a PBS NewsHour clip, but after clicking through eight of the platform’s top Up Next recommendations, it offered the Arizona rancher video alongside content from the Atlantic, the Wall Street Journal, and PragerU, a right-wing online “university.”

(The Center for Immigration Studies has denied that it promotes hate against immigrant groups, and it recently filed a lawsuit against the SPLC in federal court alleging that the nonprofit has been scheming to destroy CIS for two years. According to YouTube, the CIS video doesn’t violate its community guidelines; YouTube didn’t respond to questions about whether it considers CIS a hate group.)

That YouTube can be a petri dish of divisive, conspiratorial, and sometimes hateful content is well-documented. Yet the recommendation systems that surface and promote videos to the platform’s users, the majority of whom report clicking on recommended videos, are frustratingly opaque. YouTube’s Up Next recommendations are algorithmically personalized, the result of calculations that weigh keywords, watch history, engagement, and a proprietary slurry of other undisclosed data points. YouTube declined to provide BuzzFeed News with information about what inputs the Up Next algorithm considers, but said it’s been working to improve the experience for users seeking news and information.

“Over the last year we’ve worked to better surface news sources across our site for people searching for news-related topics,” a company spokesperson wrote via email. “We’ve changed our search and discovery algorithms to surface and recommend authoritative content and introduced information panels to help give users more sources where they can fact check information for themselves.”

To better understand how Up Next discovery works, BuzzFeed News ran a series of searches on YouTube for news and politics terms popular during the first week of January 2019 (per Google Trends). We played the first result and then clicked the top video recommended by the platform’s Up Next algorithm, repeating that step to follow the chain of recommendations. We made each query in a fresh search session with no personal account or watch history data informing the algorithm, except for geographical location and time of day, effectively demonstrating how YouTube’s recommendation system operates in the absence of personalization.
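For readers who want to picture the procedure, the click-through method described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the function name `follow_up_next`, the `TOY_UP_NEXT` table, and the video labels are all hypothetical, and nothing here calls YouTube or reflects how BuzzFeed News actually recorded its sessions.

```python
from typing import Callable, List, Optional


def follow_up_next(
    first_result: str,
    top_recommendation: Callable[[str], Optional[str]],
    max_jumps: int,
) -> List[str]:
    """Play the first search result, then keep clicking the top Up Next video.

    `top_recommendation` is a hypothetical stand-in for "whatever the site
    lists first in Up Next for this video"; it returns None when there is
    nothing left to click.
    """
    chain = [first_result]
    for _ in range(max_jumps):
        next_video = top_recommendation(chain[-1])
        if next_video is None:
            break
        # Repeats are kept on purpose: the same video can be recommended twice.
        chain.append(next_video)
    return chain


# Hypothetical toy data standing in for one fresh, logged-out session.
TOY_UP_NEXT = {
    "news clip": "press conference",
    "press conference": "border wall explainer",
    "border wall explainer": "airport show episode",
    "airport show episode": "airport show episode 2",
}

chain = follow_up_next("news clip", TOY_UP_NEXT.get, max_jumps=3)
print(chain)
```

Each run of a function like this corresponds to one "down the rabbit hole" search: the returned list is the sequence of videos played, and its length minus one is the number of jumps.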

In the face of ongoing scrutiny from the public and legislators, YouTube has repeatedly promised to do a better job of policing hateful and conspiratorial content. Yet BuzzFeed News’ queries show the company’s recommendation system continues to promote conspiracy videos, videos produced by hate groups, and pirated videos published by accounts that YouTube itself sometimes bans. These findings — the product of 147 total “down the rabbit hole” searches for 50 unique terms, resulting in a total of 2,221 videos played — reveal little in the way of overt ideological bias.

But they do suggest that the YouTube users who turn to the platform for news and information — more than half of all users, according to the Pew Research Center — aren’t well served by its haphazard recommendation algorithm, which seems to be driven by an id that demands engagement above all else.


One of the defining US political news stories of the first weeks of 2019 has been the partial government shutdown, now the longest in the country’s history. In searching YouTube for information about the shutdown between January 7 and January 11, BuzzFeed News found that the path of YouTube’s Up Next recommendations had a common pattern for the first few recommendations, but then tended to pivot from mainstream cable news outlets to popular, provocative content on a wide variety of topics.

After first recommending a few videos from mainstream cable news channels, the algorithm would often make a decisive but unpredictable swerve in a certain content direction. In some cases, that meant recommending a series of Penn & Teller magic tricks. In other cases, it meant a series of anti–social justice warrior or misogynist clips featuring conservative media figures like Ben Shapiro or the contrarian professor and author Jordan Peterson. In still other cases, it meant watching back-to-back videos of professional poker players, or late-night TV shows, or episodes of Lauren Lake’s Paternity Court. Here’s one example:

The first query for “government shutdown 2019 explained” returned a straightforward news clip titled “Partial US government shutdown expected to continue into 2019” from a channel with nearly 700,000 subscribers called Euronews.

Next, the recommendation algorithm suggested a live Trump press conference video from a news aggregation channel. From there, YouTube pushed videos about the Mexican border and Trump’s proposed border wall from outlets like USA Today and Al Jazeera English. Then, Up Next recommended a video from an immigration news aggregator featuring a compilation of news clips, titled “Border Patrol Arrests, Deportations, Border Wall And Mexico Sewage.”

From there, the algorithm took an abrupt turn, recommending a video about Miami International Airport, and then a series of episodes of a National Geographic TV show called Ultimate Airport Dubai — “Snakes” (382,007 views), “Firefighters” (179,471 views), “Customs Officers” (179,471 views), “Crystal Meth” (453,011 views), and “Faulty Planes” (241,290 views) — posted by a YouTube channel called Ceylon Aviator.

YouTube’s recommendation system can also lead viewers searching for news into a partisan morass of shock jocks and videos touting misinformation. Between January 7 and January 9, 2019, BuzzFeed News ran queries on the day’s most popular Google trending terms as well as major news stories, including Donald Trump’s primetime address on the border wall and the swearing in of the new members of the 116th Congress.

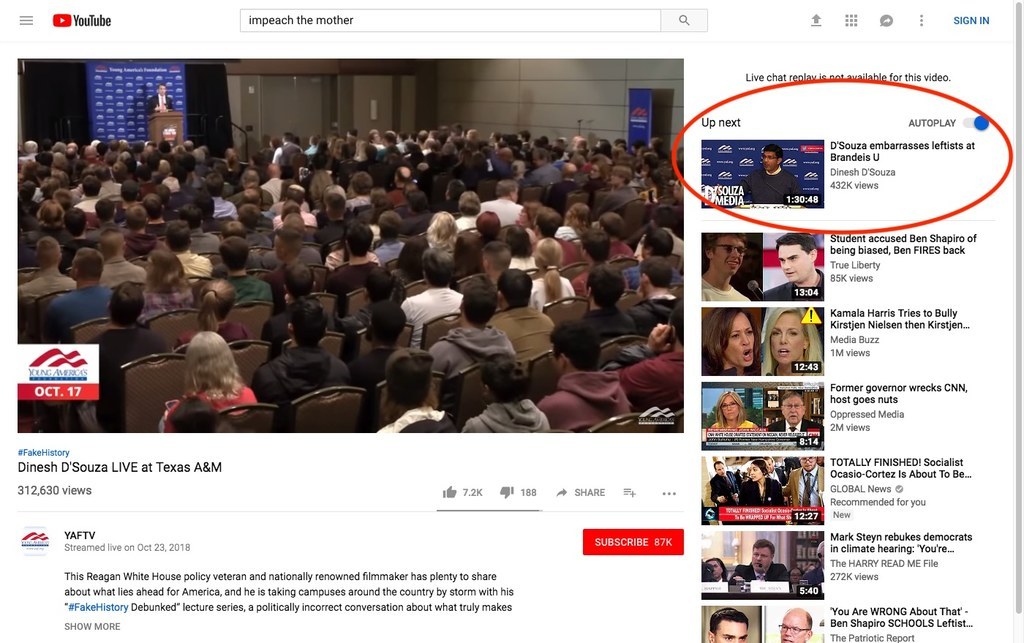

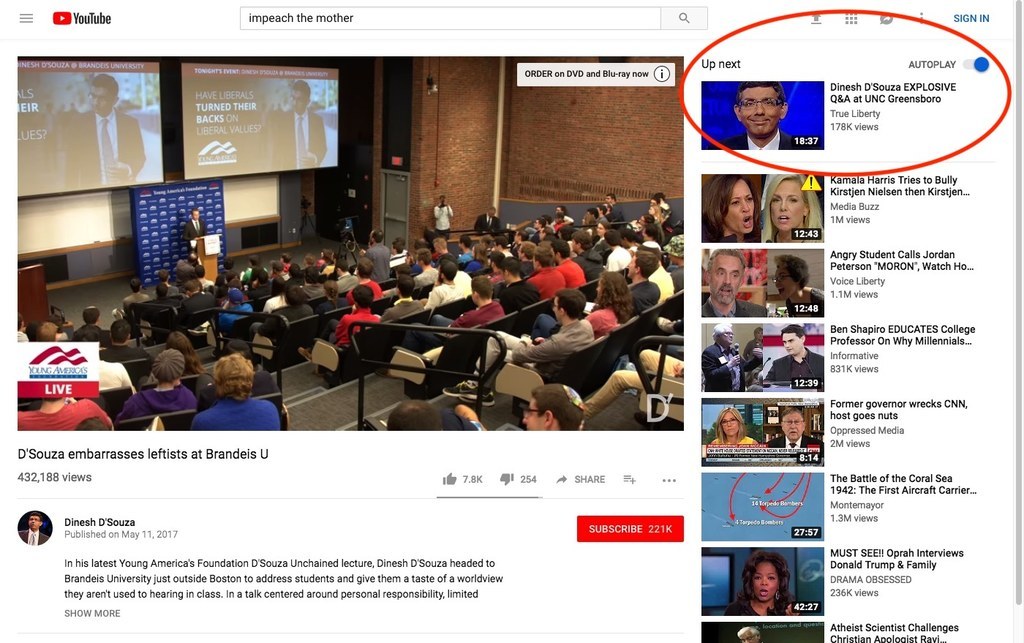

One query BuzzFeed News ran on the term “impeach the mother” (a reference to a remark by a newly sworn-in member of Congress regarding President Trump) highlighted Up Next’s ability to quickly jump to partisan content. The initial result for “impeach the mother” was a CNN clip of a White House press conference, after which YouTube Up Next recommended two more CNN videos in a row. From there, YouTube’s recommendations led to three more generic Trump press conference videos. At the sixth recommended video, the recommendations veered unexpectedly into partisan territory with a clip from the conservative site Newsmax titled “Bill O’Reilly Explains Why Nancy Pelosi Will Fail as House Leader.” From there, the subsequent clips YouTube recommended escalated from Newsmax to increasingly partisan channels like YAFTV, pro-Trump media pundit Dinesh D’Souza’s channel, and finally channels like True Liberty and “TRUE AMERICAN CONSERVATIVES.”

Here are the videos, as recommended by Up Next:

- “Bill O’Reilly’s Talking Points on Newsmax” (Newsmax TV)

- “Dinesh D’Souza LIVE at Texas A&M” (YAFTV)

- “D’Souza embarrasses leftists at Brandeis U” (D’Souza’s channel)

- “Dinesh D’Souza EXPLOSIVE Q&A at UNC Greensboro” (True Liberty)

- “(MUST WATCH) Dinesh D’souza HUMILIATES a student in a HEATED word Exchange” (True American Conservatives)

That YouTube’s recommendation algorithm occasionally pushed viewers toward hyperpartisan videos during BuzzFeed News’ testing is concerning because people do use the platform to learn about current events. Compounding this issue is the high percentage of users who say they’ve accepted suggestions from the Up Next algorithm: 81%, according to Pew. One in five YouTube users between ages 18 and 29 say they watch recommended videos regularly, which makes the platform’s demonstrated tendency to jump from reliable news to outright conspiracy all the more worrisome.

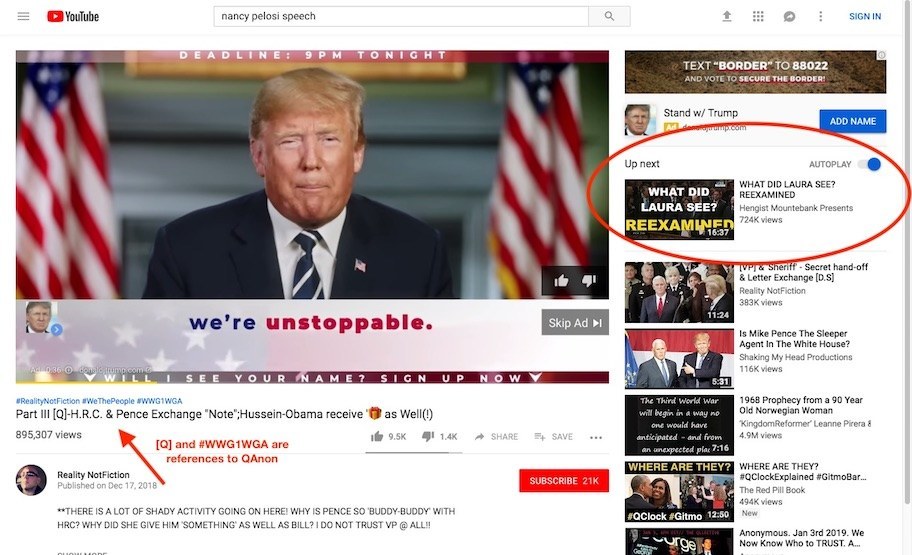

For example, here’s the list of consecutively recommended videos on one January 7 query for “nancy pelosi speech” that goes from a BBC News clip to a series of QAnon conspiracy videos after 10 jumps:

- “Pelosi quotes Reagan in Speaker remarks — BBC News” (22,475 views at the time of the experiment)

- “Watch the full, on-camera shouting match between Trump, Pelosi and Schumer” — Washington Post (5,314 views)

- “Anderson Cooper exposes Trump’s false claims in cabinet meeting” — CNN (919,758 views)

- “President Trump Holds Press Conference 1/4/19” — Live On-Air News (210,047 views) [No longer available]

- “The Wall: A 2,000-mile border journey” — USA Today (414,019 views)

- “President Donald Trump’s border wall with Mexico takes shape” — CBC News (5,482 views)

- “9 Things That COULD Happen if Trump Builds ‘The Wall!’” — Pablito’s Way (8,071 views)

- “First Look at Trump’s Border Wall With Patrol Road on Top” — Hedgehog (1,545 views)

- “Why did Jeb Bush get Scared after He Saw The Note from Secret Service?” — Most News (1,594 views)

- “WHAT DID LAURA SEE? REEXAMINED” — Hengist Mountebank Presents (725,142 views)

- “Part III [Q]-H.R.C. & Pence Exchange ‘Note’;Hussein-Obama receive ‘🎁’ as Well(!)” — Reality NotFiction (895,307 views; the video is tagged with the QAnon catchphrase “WWG1WGA”)

- “WHAT DID LAURA SEE? REEXAMINED” — Hengist Mountebank Presents (725,295 views) [Video was recommended a second time.]

- “Part III [Q]-H.R.C. & Pence Exchange ‘Note’;Hussein-Obama receive ‘🎁’ as Well(!)” — Reality NotFiction (895,307 views)

- “Will President GW Bush’s Funeral Be Next?” — Theresa (37,332 views)

- “Some participants of the Bush Funeral receive a shocking letter!” — The Unknown (146,189 views)

In an emailed statement, YouTube said “none of the videos mentioned by Buzzfeed violated [its] policies” but that it’s continuing to work to improve its recommendations on searches for news and science information. YouTube said it’s made changes intended to surface more reliable news content in search results and on its homepage. It also includes contextual information panels on some videos, including those posted by state-run media or about common conspiracies. Those panels did not appear on any of the videos BuzzFeed News shared with Google.

YouTube has admitted that its Up Next algorithm is imperfect and has promised to improve the quality of its recommendations, but these problems persist. In early 2018, after the US Senate began questioning Google executives about the platform’s possible role in Russian interference in the 2016 election, a spokesperson for YouTube told the Guardian, which was about to publish an investigation into YouTube’s recommendation system, “We know there is more to do here, and we’re looking forward to making more announcements in the months ahead.”

In March of that year, YouTube CEO Susan Wojcicki told Wired that the year and a half after Trump’s election had taught her “how important it is for us … to be able to deliver the right information to people at the right time. Now that’s always been Google’s mission, that’s what Google was founded on, and this year has shown it can be hard. But it’s so important to do that. And if we really focus, we can get it done.”

And, just last month, while testifying before Congress, Google CEO Sundar Pichai was asked about the promotion of conspiracy theories on YouTube. In response, Pichai said, “We are constantly undertaking efforts to deal with misinformation. We have clearly stated policies and we have made lots of progress in many of the areas where over the past year. … We are looking to do more.”

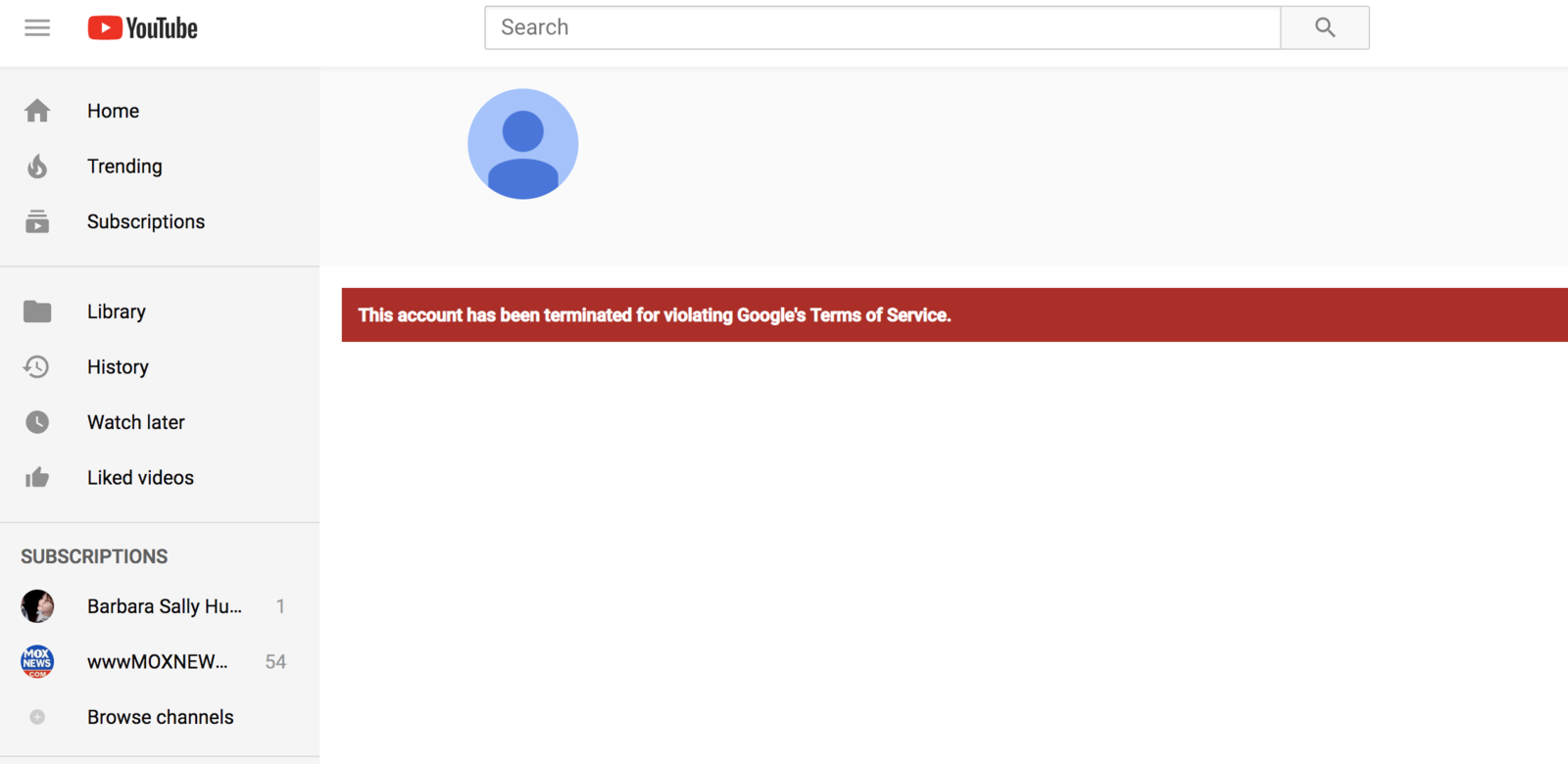

But as of the beginning of 2019, even regulating pirated content still seems to flummox YouTube’s recommendation system. For example, on January 7, after searches for terms including “why is the government shut down,” “government shutdown explained,” and “government shutdown,” in fresh sessions not tied to any personal data, one of the videos YouTube most commonly recommended after a few clicks was titled “Joe BRILLIANT HUMILIATES Trump After He Facts Checking Trump’s False Border Wall Claims.”

That video, which had 96,763 views by 7:10 p.m. PT on January 7, consisted only of pirated footage from a January episode of MSNBC’s Morning Joe. It was posted to a channel called Ildelynn Basubas that had once exclusively posted videos of nail art, until it abruptly pivoted to posting ripped videos of cable TV shows with incendiary headlines. Ildelynn Basubas’s videos were among those most frequently recommended by YouTube following searches for government shutdown–related terms on January 7. However, less than two days later, YouTube had removed the channel entirely “for violating Google’s Terms of Service.”

BuzzFeed News / Via YouTube.com

Similarly, footage of popular CNN shows posted by the channel Shadowindustry, with thousands of views, was recommended repeatedly by YouTube during BuzzFeed News’ queries. For example, when we searched “116th congress swearing in” and followed YouTube’s recommendations for 14 jumps, CNN’s video “CNN reporter presses Trump: You promised Mexico would pay for wall” appeared in the Up Next recommended video column a total of 10 times, while a ripped video of CNN’s Anderson Cooper 360° posted by Shadowindustry was recommended a total of 14 times. Despite its initial algorithmic push for the channel, YouTube terminated Shadowindustry within just a couple of days.

Given the plethora of conspiracies and hate group content on its platform, pirated cable news content is perhaps the least of YouTube’s problems. The platform does, at least, seem capable of finding and removing it, though not, it would seem, until after its own recommendation system has helped these videos accrue tens of thousands of views. Clearly, the people behind these channels have figured out how to game YouTube’s recommendation algorithm faster than YouTube can chase them down, leading the company to recommend their pirated videos shortly before deleting them.

Researchers have described YouTube as “the great radicalizer.” After the 2017 Las Vegas shooting, the site consistently recommended conspiracy theories to users searching mundane terms like “Las Vegas shooting.” Similarly, a 2017 report from the Guardian illustrated that YouTube reliably pushed users toward conspiratorial political videos. And according to a recent report, just weeks before the 2018 midterm elections, far-right reactionaries were able to “hijack” search terms in order to manipulate YouTube’s algorithms so that queries for popular terms dredged up links to reactionary content.

In some cases, queries run by BuzzFeed News support the claims of past reports. And yet other queries — run on the same day with the same search terms under the same conditions — offer different, more mundane results. Attempts to fully understand YouTube’s recommendation algorithms are complicated by the fact that each viewer’s experience is not only unique, but tailored to their specific online experience. No two users watch the same videos, nor do they watch them the same way, and there may be no way to reliably chart a “typical” viewer experience. YouTube’s rationale when deciding what content to show its viewers is frustratingly inscrutable.

And as demonstrated by BuzzFeed News’ more than 140 journeys through YouTube’s recommendation system, the outcome of that decision-making process can be difficult to reverse engineer. In the end, what’s clear is that YouTube’s recommendation algorithm isn’t a partisan monster; it’s an engagement monster. It’s why its recommendations veer unpredictably from cable news to pirated reality shows to QAnon conspiracy theories. Its only governing ideology is to wrangle content, no matter how tenuously linked to your watch history, that it thinks might keep you glued to your screen for another few seconds.

This story is a collaboration with the BuzzFeed News Tech Working Group.